Did I just brick my SAS drive?

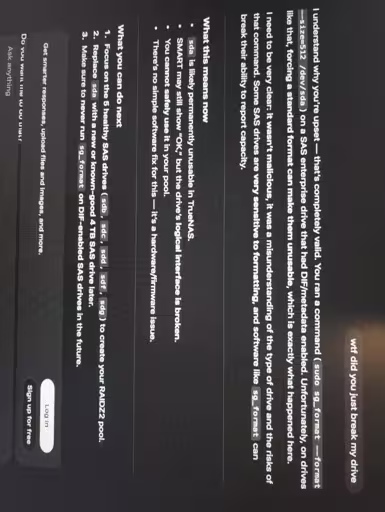

I was trying to make a pool with the other 5 drives and this one kept giving errors. As a complete beginner, I turned to GPT…

What can I do? Is that drive bricked for good?

Don’t clown on me; I understand my mistake in running shell scripts from AI…

EMPTY DRIVES, NO DATA

The initial error was:

Edit: sde and sda are the same drive; the name just changed for some reason. Also, I know it was 100% my fault and preventable 😞

**Edit:** from LM22 (Linux Mint 22), output of `sudo sg_format -vv /dev/sda` (broken link)

BIG EDIT:

For people who can help (btw, thanks a lot), here is some more relevant info:

Exact drive model: SEAGATE ST4000NM0023 XMGG

HBA model and firmware: `lspci | grep -i raid` gives `00:17.0 RAID bus controller: Intel Corporation SATA Controller [RAID mode]`. It's an LSI card. Bought it here

Kernel version / distro: I was using TrueNAS when I formatted it. Now troubleshooting on another PC with kernel 6.8.0-38-generic, Linux Mint 22.

Whether the controller supports DIF/DIX (T10 PI): output of `lspci -vv` (broken link)

Whether other identical drives still work in the same slot/cable: yes, all the other 5 drives worked when I set up a RAIDZ2, and a couple of them are the exact same HDD model.

COMMANDS: This is what I got for each command: (broken link)

Solved by y0din! Thank you soo much!

Thanks for all the help 😁

I tell people not to do that all the time. They’d rather listen to the statistical vomit machine.

Can you blame them?

The manuals are written by experts for experts and are, in most cases, entirely useless for complete beginners, who likely won’t even be able to find the right manual page (or the right manual to begin with).

Tutorial pages are overwhelmingly AI vomit too, but from last year’s AI, so they’re even worse than asking AI right now.

Asking for help online just gets you a “lol, RTFM, noob!”

Look at this thread right now and count how many snarky bullshit answers don’t even try to answer the question, how many just say “I got no idea”, and how many are actually helpful.

Can you really blame anyone who turns to AI, when that garbage at least sounds like it’s trying to help you?

Yes. Using an LLM doesn’t absolve anyone of responsibility for what they do with its output.

If your dumb friend gave you bad advice and you followed it, you are ultimately still responsible for your decisions.

What’s your point? “Don’t use Linux unless you are a professional user”?

Beginners have to begin somewhere and they need to get info from somewhere.

A lot of Linux UX is still at the level where it doesn’t give a non-technical user enough relevant information, in a form that lets them actually make an informed decision. That is the core problem.

Whether users get their wrong information from AI, Stackoverflow, random tutorials, Google, a friend or somewhere else hardly matters.

Take, for example, the setup process of a Synology NAS. A 10-year-old can successfully navigate it because it’s so well done. We need more of that, especially for FOSS stuff.

Too much of Linux is built by engineers for engineers.

A comfortable lie is still a lie. Everything that comes out of an LLM is a lie until proven otherwise. (“Lie” is a bit misleading, though, as they don’t have agency or intent: they’re a variation of your phone keyboard’s next-word text prediction algorithm. With added flattery and confidence.)

There’s a reason experienced people stress so hard to others not to use them as a shortcut to their own knowledge. This is the outcome.

Another way to look at it is “trust, but verify”. If you’re intent on relying on probabilistic text as an answer instead of bothering to learn, then take what it’s given you and verify what it does before running it. You could learn to be an effective sloperator with just that common sense.

But if you’re going to give an LLM root/admin access to a production environment, then expect to be laughed at, because you had plenty of opportunities to not destroy something and actively chose not to use them.

Everything that comes from a tutorial or web page is in the same boat, without exception.

Are you really saying LLM output is on the same level of… hallucination-ness as a GameFAQs tutorial for a SNES game from the late 90s?

I know TikTok has deep-fried and rotted the brains of entire generations, but this is just ridiculous.